Fall 2019/2020, 0368-4010-01

Course hours: Monday, 16:00-19:00

Recitations: Monday, 19:00-20:00

Location: Sherman 003

Instructor: Dan Halperin, danha AT post tau ac il

Office hours by appointment

Teaching assistant: Michal Kleinbort, balasmic at post tau ac il

Office hours by appointment

Grader: Yair Karin, yair dot krn at gmail com

Software helpdesk: Nir Goren, nirgoren at mail tau ac il, Office hours by appointment

The recent years have seen an outburst of new robotic applications and robot designs in medicine, entertainment, security and manufacturing to mention just a few areas. Novel applications require ever more sophisticated algorithms. In the course, we shall cover computational and algorithmic aspects of robotics with an emphasis on motion planning.

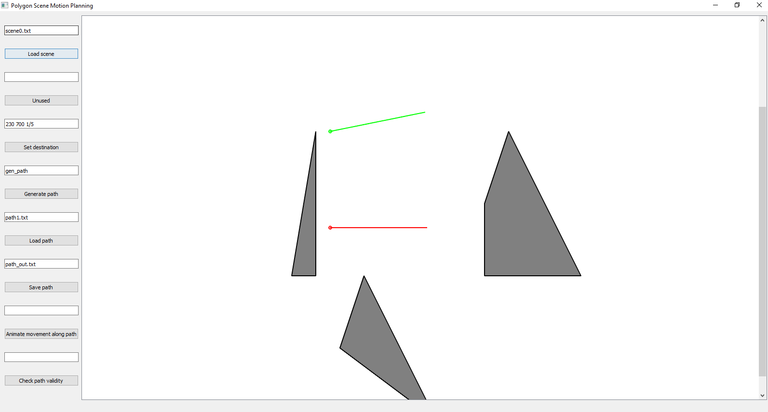

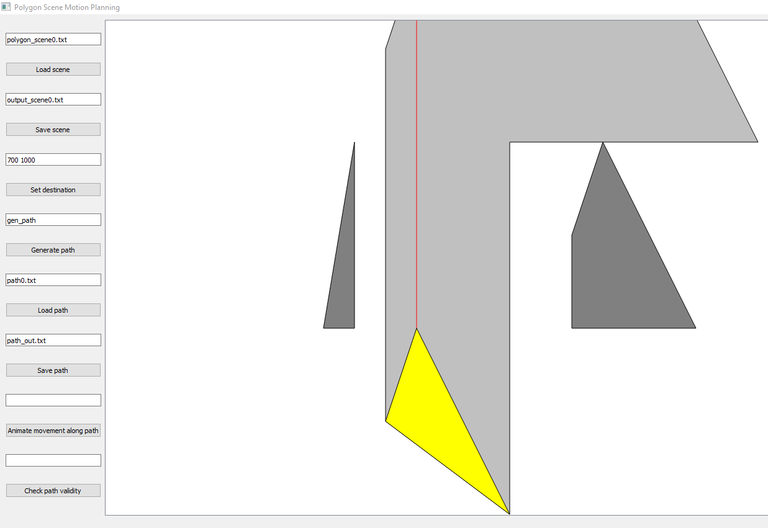

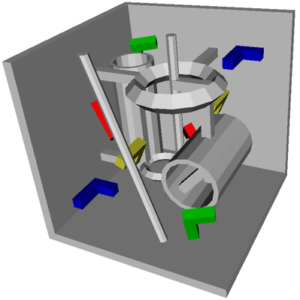

The motion-planning problem is a key problem in robotics. The goal is to plan a valid motion path for a robot (or any other mobile object for that matter) within a given environment, while avoiding collision with obstacles. The scope of the motion-planning problem is not restricted to robotics, and it arises naturally in different and diverse domains, including molecular biology, computer graphics, computer-aided design and industrial automation. Moreover, techniques that were originally developed to solve motion-planning problems have often found manifold applications.

The topics that will be covered include (as time permits):

- A brief tour of algorithmic problems in robotics

- The configuration space approach and the connection between motion planning and geometric arrangements

- Minkowski sums; exact and efficient solution to translational motion planning in the plane

- Translation and rotation in the plane; translational motion of polyhedra in space

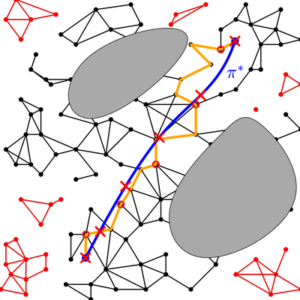

- Sampling-based motion planning

- Collision detection and nearest-neighbor search

- Path quality: shortest paths, high clearance paths, other quality measures, multi-objective optimization

- Direct and inverse kinematics: from industrial manipulators to proteins

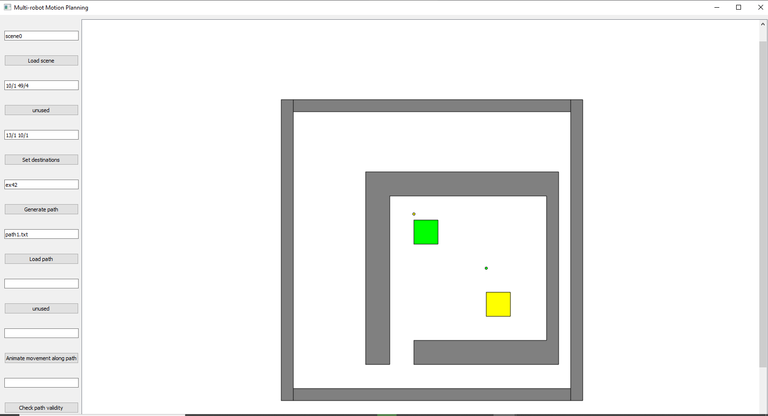

- Multi-robot motion planning

Prerequisites

The course is geared towards graduate students in computer science. Third-year undergrads are welcome; in particular, the following courses are assumed: Algorithms, Data structures, Software1.

The final grade

Homework assignments (40%), brief talk in class on a topic of the student’s choice (subject to approval) (10%), final project (50%).

A short bibliography:

Assignments

- Assignment no. 1 file , due: November 11th, 2019, additional information.

- Assignment no. 2 file , due: December 9th December 2nd, 2019, additional information.

- Assignment no. 3 file , due: December 31st , 2019, additional information. Notice that Ex. 3.4 is a theory exercise.

- Assignment no. 4 file , due: January 23rd, 2020, Ex. 4.4 is due January 16th 2020, additional information.

Guest Lectures

- Guy Hoffmnan, Cornell, 23.12.19: Designing Robots and Designing with Robots: New Strategies for an Automated Workplace

- Ilana Nisky, BGU, 6.1.20: Haptics for the Benefit of Human Health [slides]

- Aviv Tamar, Technion, 2.12.19: Machine Learning in Robotics

- Lior Zalmanson, TAU, 25.11.19: Trekking the Uncanny Valley — Why Robots Should Look Like Robots?

Mini talks by students

- Golan Levy, 4.11.19: How Do Robots See? On Lidar and Other Detection Systems [slides]

- Itay Yehuda, 11.11.19: Minimizing Radioactive Influence, or How to Balance Length and Clearance [slides]

- Tom Verbin, 18.11.19: Rotations in Space with Quaternions [slides]

- Dror Dayan and Nir Goren, 18.11.19: Efficient Algorithms for Optimal Perimeter Guarding [slides]

- Dor Israeli, 9.12.19: Friendly Introduction to Robotic Estimations Using Kalman Filters [slides]

- Adar Gutman, 9.12.19: Complexity of the Minimum Constraint Removal Problem [slides]

- Tal Eisenberg, 9.12.19: Neural Prosthetics: From Brain Activity to Robotic Motion and Back

- Inbar Ben david, 16.12.19: A Brief Introduction to ROS

- Or Yarinitzky and Liran Zusman, 16.12.19: Robotics StackExchange: Efficient, High-Quality Stack Rearrangement

- Or Perel, 16.12.19: An Arm and a Leg: Humanoid Robots Design

- Yossi Khayet, 23.12.19: Robots and Chess: from The Turk to beating Grandmasters

- David Krongauz, 23.12.19: Foraging in Swarm Robotics: Inspired by Ants, Implemented by Robots

- Guy Korol, 30.12.19: Soft Robotics: Designing Robotic Actuators Made from Flexible Materials [slides]

- Harel Oshri, 30.12.19: Computational Interlocking Furniture Assembly

- Nadav Ostrovsky, 30.12.19: Collisions as Information Source in Multi-Robot Systems

- Nave Tal, 30.12.19: Tabletop Rearrangements with Overhead Grasps [slides]

- Netanel Fried, 30.12.19: Using Simple Robots in Challenging Terrains

- Tomer Davidor, 30.12.19: Using Game Theory for Autonomous Race Cars

- Eitan Niv, 13.1.20: Locomotion Algorithms for Snake Robots

- Lana Salameh, 13.1.20: Nano robotics – Automatic Planning of Nanoparticles Assembly Tasks

- Doron Kopit, 13.1.20: Controlling a Swarm: Centralized vs Decentralized

- Dan Flomin, 13.1.20: Tasty Robots: Capsule Robotics in Gastroenterology Medicine

- Abed Khateeb, 20.1.20: Low-cost Aggressive Off-Road Autonomous Driving

Course summary

- 28.10.19

Introduction [pdf]Recitation 1: Intersection of half-planes [pdf]

- 4.11.19

Robots with 2 dofs and two-dimensional state spaces

Disc among discs [slides], proof of the combinatorial bound [pdf]

Minkowski sums and transnational motion planning [slides, up till Slide #15]Recitation 2: Plane sweep for line segment intersection. [pdf, the slides are by Marc van Kreveld] - 11.11.19

Minkowski sums, continued [slides, #15-39]

Motion planning and geometric arrangements, general exposition [slides, up till Slide #13]Recitation 3: DCEL and map overlay. [pdf, the slides are by Marc van Kreveld]

Python code demonstrating Minkowski sum computation and Map overlay (developed by Nir Goren), requires the Python bindings for CGAL - 18.11.19

Motion planning and geometric arrangements, continued: general algorithms [slides]

Piano Movers I, translating and rotating a segment in the plane [slides, up till Slide #12]Recitation 4: Convex hull. [pdf, the slides are by Marc van Kreveld] - 25.11.19

Piano Movers I, cont’d [slides]

Trekking the Uncanny Valley — Why Robots Should Look Like Robots?, guest lecture by Lior ZalmansonRecitation 5: Rotational sweep, Kd-trees [pdf, the slides are by Marc van Kreveld] - 2.12.19

Sampling-based motion planning I: PRM [slides, up till Slide #29]

Machine Learning in Robotics, guest lecture by Aviv Tamar - 9.12.19

Sampling-based motion planning I: PRM, cont’d [slides, till the end]Recitation 7: Voronoi diagrams [pdf, the slides are by Marc van Kreveld]

- 16.12.19

Collision detection [slides, new version: Dec 23rd, 2019]

- 23.12.19

Sampling-based motion planning II: Single query planners and the RRT family [slides]Recitation 9: Sampling-based motion planning under kinodynamic constraints [pdf]

- 30.12.19

Path quality [slides , up till Slide #29]

- 6.1.20

Path quality, cont’d [slides, new version: Jan 5th. 2020]Recitation 10: RRT, RRT* [pdf]

-

13.1.20

Multi robot motion planning: Extended review [slides]